Transformer models have completely changed how computers understand human language. These neural network architectures are the foundation of modern applications like ChatGPT and Google Translate, as well as recent computer vision systems.

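A transformer model processes sequential data using self-attention mechanisms instead of recurrence or convolution. Because every token can attend directly to every other token, and all positions are computed in parallel, this approach handles long-range dependencies in text much better than step-by-step models.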

Google researchers introduced the transformer architecture in 2017 with their paper “Attention Is All You Need.” This breakthrough sparked major advances in natural language processing and pattern recognition.

How Does Transformer Architecture Work? (Step-by-Step Explanation)

The transformer processes information through interconnected layers. The core uses attention weights to focus on relevant parts of the input sequence.

Input text gets converted into a sequence of tokens through a tokenization process. Each token is then mapped to an embedding vector that captures its semantic meaning.
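The tokenization and embedding steps can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the whitespace tokenizer, the helper names (`tokenize`, `build_vocab`, `make_embedding_table`), and the random embedding table are all hypothetical stand-ins. Real models use subword tokenizers and embeddings learned during training.

```python
import random

def tokenize(text):
    """Toy tokenizer: lowercase and split on whitespace (real models use subword schemes)."""
    return text.lower().split()

def build_vocab(tokens):
    """Assign each distinct token an integer id in order of first appearance."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab

def make_embedding_table(vocab_size, dim, seed=0):
    """Random table standing in for learned embeddings: one vector per token id."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]

tokens = tokenize("The cat sat on the mat")
vocab = build_vocab(tokens)
token_ids = [vocab[t] for t in tokens]          # text -> integer ids
table = make_embedding_table(len(vocab), dim=4)
embeddings = [table[i] for i in token_ids]      # ids -> vectors
```

Both occurrences of "the" share one id, so they receive the same embedding vector. Distinguishing them is the job of positional information and attention.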

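Positional encoding gets added to the embeddings to tell the model about word order. This step matters because self-attention by itself is order-agnostic: without position information, shuffling the input tokens would simply shuffle the outputs in the same way.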

Multiple attention layers calculate relationships between different tokens. Each layer uses multi-head attention to capture various relationship types simultaneously.

The processed representations then pass through feed-forward layers and an output layer that generates the final output. This could be translated text, generated content, or classification results.

Self-Attention Mechanism in Transformers: Complete Guide 2025

Self-attention enables transformers to understand relationships between words in sentences. This mechanism focuses on relevant input parts when processing each token.

The process works through query-key-value calculations. Each input token generates three vectors: query, key, and value. The model computes attention scores by comparing queries with keys.

These scores determine focus levels for each token. The softmax function normalizes scores to create probability distributions that guide attention.
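The query-key-value computation described above can be sketched in plain Python. This is a minimal illustration, not a production implementation. It assumes the query, key, and value vectors are already given (the learned projections that produce them from embeddings are omitted), and it divides scores by the square root of the key dimension, as in the original "Attention Is All You Need" formulation. The function names are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)                      # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Compare this query with every key.
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

vectors = [[1.0, 0.0], [0.0, 1.0]]
outputs, weights = attention(vectors, vectors, vectors)
```

In this toy input each query matches its own key best, so each token puts more weight on itself than on the other token.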

Self-supervised learning helps transformers learn attention patterns from text data without explicit labels. Models discover language patterns through exposure to diverse training datasets.

Self-attention handles long-range dependencies efficiently. Unlike traditional models that struggle with distant relationships, transformers connect words across entire documents.

Transformer Model Components: Encoder, Decoder & Attention Layers

The transformer has two main parts: encoders and decoders. Each component serves specific purposes in processing and generating information.

Encoder blocks process input sequences and create rich data representations. These blocks contain multi-head attention layers followed by feed-forward networks.

Decoder blocks generate output sequences based on encoder representations. Decoders use masked attention to only look at previous tokens during generation.
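Masked attention can be illustrated with a small sketch. It assumes raw attention scores have already been computed, and the helper names (`causal_mask`, `masked_softmax`) are hypothetical. The idea is simply that position i may only put weight on positions at or before i.

```python
import math

def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

def masked_softmax(scores, allowed):
    """Softmax over allowed positions only; disallowed positions get weight 0.

    Assumes at least one position is allowed, which always holds for a
    causal mask because every position may attend to itself.
    """
    kept = [s for s, ok in zip(scores, allowed) if ok]
    m = max(kept)
    exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(scores, allowed)]
    total = sum(exps)
    return [e / total for e in exps]

# Toy scores: every position would prefer position 1 if it were allowed to look.
scores = [[0.5, 2.0, 1.0] for _ in range(3)]
mask = causal_mask(3)
weights = [masked_softmax(row, allowed) for row, allowed in zip(scores, mask)]
```

The first position can only attend to itself, so all of its weight lands there, even though its raw score for position 1 is higher.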

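Cross-attention layers connect encoders and decoders: the decoder supplies the queries, and the encoder output supplies the keys and values. This allows the decoder to focus on relevant input parts when generating each output token.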

Each component uses residual connections and layer normalization for stable training. These choices help with backpropagation and improve performance.
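As a rough sketch of how a residual connection and layer normalization wrap a sublayer, the code below follows the "post-norm" arrangement of the original transformer, LayerNorm(x + Sublayer(x)). The learned gain and bias of layer normalization are omitted for brevity, and the sublayer here is a hypothetical placeholder rather than a real attention or feed-forward layer.

```python
import math

def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and (approximately) unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def residual_block(x, sublayer):
    """Apply a sublayer, add its output back to the input, then normalize."""
    y = sublayer(x)
    return layer_norm([a + b for a, b in zip(x, y)])

# Placeholder sublayer (an assumption for illustration): halves its input.
out = residual_block([1.0, 2.0, 3.0, 4.0], lambda v: [0.5 * t for t in v])
```

The addition gives gradients a direct path around the sublayer, which is the reason this pattern helps with backpropagation in deep stacks.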

What is Multi-Head Attention? (With Simple Examples)

Multi-head attention lets transformer models focus on different relationship types simultaneously. Instead of running a single attention computation, transformers split attention into multiple “heads” that capture various patterns.

Each attention head can learn to track a different aspect of the input. One head might focus on syntactic relationships while another captures semantic meaning.

Consider “The cat sat on the mat.” One head might focus on the subject-verb relationship between “cat” and “sat,” while another examines the spatial relationship expressed by “on the mat.”

The model combines outputs from all attention heads for comprehensive understanding. The head outputs are concatenated and passed through a learned linear transformation that mixes each head’s contribution.
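The split-and-recombine bookkeeping behind multi-head attention can be sketched as follows. This shows only how a vector is divided among heads and how head outputs are concatenated and projected. The per-head attention itself is left out, the helper names are hypothetical, and the identity matrix stands in for a learned output projection.

```python
def split_heads(vector, num_heads):
    """Slice one vector into equal-sized chunks, one per head."""
    head_dim = len(vector) // num_heads
    if head_dim * num_heads != len(vector):
        raise ValueError("vector length must be divisible by num_heads")
    return [vector[h * head_dim:(h + 1) * head_dim] for h in range(num_heads)]

def concat_heads(heads):
    """Join per-head outputs back into a single vector."""
    return [x for head in heads for x in head]

def linear(vector, weight):
    """Multiply a vector by a weight matrix given as a list of output rows."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weight]

heads = split_heads([1.0, 2.0, 3.0, 4.0], num_heads=2)
# In a real model each head would run attention here; this sketch skips that step.
merged = concat_heads(heads)
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
projected = linear(merged, identity)   # stand-in for the learned output projection
```

Because each head works on a smaller slice, the total cost stays close to that of a single full-width attention computation.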

Multi-head attention significantly improves performance on complex tasks like question answering and named entity recognition.

Transformer vs RNN vs CNN: Which is Better for Applications?

Transformers outperform traditional Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs) in many applications.

RNNs process sequences step by step, making them naturally suited for sequential data but slow during training. They struggle with long-range dependencies.

CNNs excel at processing grid-like data such as images through convolutional layers. They work well for computer vision tasks but less effectively for natural language processing.

Transformers use parallel processing to handle entire sequences simultaneously, making them much faster to train. Their attention mechanisms capture long-range dependencies better.

The choice depends on specific tasks. Transformers dominate text generation and language modeling, while CNNs remain strong for image processing.

Popular Transformer Models: GPT, BERT, T5 Comparison

GPT (Generative Pre-trained Transformer) models focus on autoregressive text generation. They predict the next token in sequences and excel at creative writing and conversational applications.
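Autoregressive generation can be pictured as a loop that repeatedly asks a model for next-token scores and appends the best candidate. The sketch below uses greedy decoding, and a hypothetical lookup table (`TOY_TABLE`) stands in for a trained model. A real GPT model conditions on the whole preceding sequence and usually samples rather than always taking the top token.

```python
def greedy_generate(next_token_scores, prompt, max_new_tokens, eos="<eos>"):
    """Repeatedly append the highest-scoring next token until eos or the limit."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)     # dict: candidate token -> score
        best = max(scores, key=scores.get)
        if best == eos:
            break
        tokens.append(best)
    return tokens

# Hypothetical stand-in for a trained model: scores depend only on the last token.
TOY_TABLE = {
    "the": {"cat": 0.7, "mat": 0.3},
    "cat": {"sat": 0.9, "<eos>": 0.1},
    "sat": {"<eos>": 0.8, "the": 0.2},
}

def toy_model(tokens):
    return TOY_TABLE.get(tokens[-1], {"<eos>": 1.0})

result = greedy_generate(toy_model, ["the"], max_new_tokens=5)
```

Each generated token becomes part of the input for the next step, which is what "autoregressive" means here.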

BERT (Bidirectional Encoder Representations from Transformers) uses bidirectional processing to understand context from both directions. This makes BERT excellent for text classification and sentiment analysis.

T5 (Text-to-Text Transfer Transformer) treats all NLP tasks as text-to-text problems. This unified approach makes T5 versatile for machine translation and text summarization.

Each model uses a different architecture. GPT models are decoder-only, while BERT is encoder-only. T5 uses the full encoder-decoder design for maximum flexibility.

The choice depends on specific needs. GPT excels at generation tasks, BERT handles understanding tasks well, and T5 provides versatility across multiple applications.

How to Train a Transformer Model: Best Practices 2025

Training transformer models requires careful consideration of model parameters, training datasets, and computational resources. Modern transformers contain anywhere from millions to hundreds of billions of parameters that need optimization through gradient descent.

Data preprocessing is crucial for successful training. This includes tokenization, sequence padding, and data augmentation techniques. High-quality training datasets significantly impact final performance.
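Sequence padding is easy to show concretely. The sketch below pads a batch of token-id lists to a common length and builds a mask so that later layers can ignore the padding. The function name and the choice of 0 as the padding id are assumptions for illustration.

```python
def pad_batch(sequences, pad_id=0):
    """Right-pad token-id lists to equal length; mask marks real tokens with 1."""
    max_len = max(len(s) for s in sequences)
    padded = [s + [pad_id] * (max_len - len(s)) for s in sequences]
    mask = [[1] * len(s) + [0] * (max_len - len(s)) for s in sequences]
    return padded, mask

padded, mask = pad_batch([[5, 6, 7], [8]])
```

The mask is typically used so that attention assigns no weight to padded positions.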

GPU acceleration is essential for training large transformer models efficiently. Modern training uses distributed computing across multiple GPUs to handle computational demands.

Learning rate scheduling and regularization techniques prevent overfitting and ensure stable training. Gradient clipping and weight decay help manage the optimization process.
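Two of these techniques are small enough to sketch directly. The schedule below follows the warmup-then-decay formula from "Attention Is All You Need" (learning rate rises linearly for `warmup_steps`, then decays with the inverse square root of the step), and the clipping function rescales a gradient vector whose norm exceeds a threshold. The function names and default values are illustrative, and many other schedules are used in practice.

```python
import math

def warmup_lr(step, d_model=512, warmup_steps=4000):
    """Linear warmup followed by inverse-square-root decay."""
    step = max(step, 1)                     # avoid 0 ** -0.5 at step 0
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)

def clip_by_norm(grads, max_norm):
    """Scale the gradient vector down if its L2 norm exceeds max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm or norm == 0.0:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]

early, peak, late = warmup_lr(1000), warmup_lr(4000), warmup_lr(16000)
clipped = clip_by_norm([3.0, 4.0], max_norm=1.0)
```

Clipping keeps the gradient direction and changes only its length, so one unusually large batch gradient cannot destabilize the update.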

Model checkpointing and validation monitoring are critical for tracking training progress. Regular evaluation on held-out data helps prevent overfitting.

Transformer Applications: NLP, Computer Vision & Beyond

Transformers have expanded beyond natural language processing into computer vision, audio processing, and multimodal applications. Their versatility makes them suitable for diverse pattern recognition tasks.

Vision Transformers (ViTs) apply transformer architecture to image classification and object detection. They process images as sequences of patches, achieving competitive performance.
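To make "images as sequences of patches" concrete, the sketch below cuts a small single-channel image (a list of rows) into non-overlapping square patches and flattens each one. The function name is hypothetical, and a real ViT would additionally project each flattened patch to an embedding vector and add position information.

```python
def image_to_patches(image, patch_size):
    """Split a 2D image into flattened, non-overlapping square patches (row-major)."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches

# A 4x4 toy image whose pixel values are 0..15.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
patches = image_to_patches(image, patch_size=2)
```

The resulting list of four patches plays the same role as a sequence of four tokens in a text model.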

Audio transformers handle speech recognition, music generation, and audio classification tasks. They can process spectrograms and raw audio waveforms effectively.

Multimodal transformers combine text, images, and other data types for tasks like image captioning and visual question answering.

Scientific applications include protein folding prediction, drug discovery, and climate modeling. Transformers’ ability to model complex relationships makes them valuable for research applications.

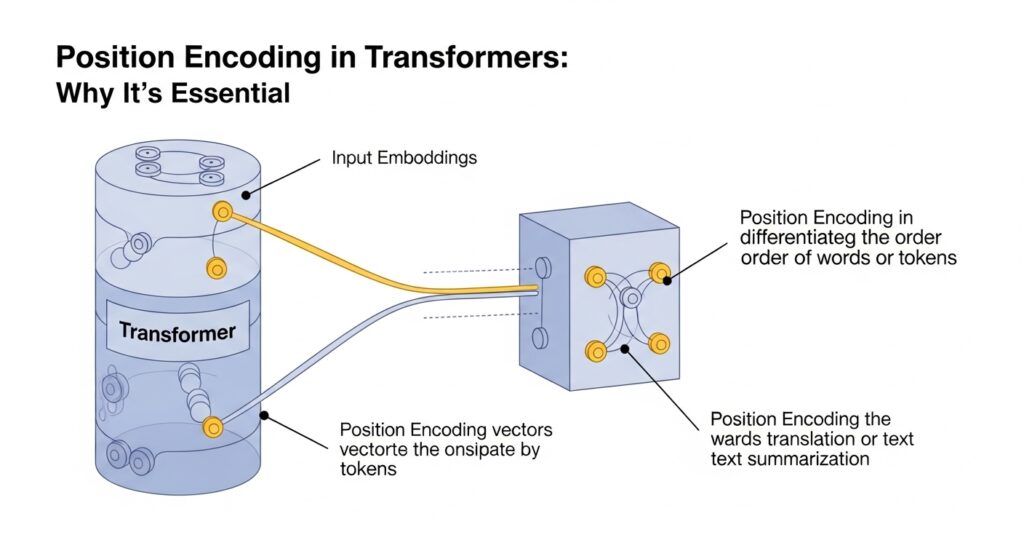

Position Encoding in Transformers: Why It’s Essential

Positional encoding provides transformers with information about token order and sequence structure. Without this component, transformers would treat input as unordered token sets.

Sinusoidal encodings use mathematical functions to create unique position representations for each token position. These encodings help models understand sequential relationships and word order.
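The sinusoidal scheme from the original transformer paper uses sine for even dimensions and cosine for odd ones, with wavelengths that grow geometrically across dimensions. A minimal sketch (the function name is an assumption):

```python
import math

def sinusoidal_encoding(position, d_model):
    """Return the d_model-dimensional sinusoidal encoding for one position."""
    encoding = []
    for i in range(d_model):
        pair = i // 2                                   # dimensions 2k and 2k+1 share a frequency
        angle = position / (10000 ** (2 * pair / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

pe0 = sinusoidal_encoding(0, d_model=4)
pe1 = sinusoidal_encoding(1, d_model=4)
```

The encoding vector is added element-wise to the token embedding at the same position, so identical words at different positions end up with different inputs.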

Learned positional embeddings allow models to discover optimal position representations during training. This approach adapts to specific tasks and sequence lengths more effectively.

Relative position encoding focuses on relationships between tokens rather than absolute positions. This approach helps models generalize to longer sequences.
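One way to picture relative position information is as a matrix of offsets between token pairs. The sketch below computes the offset j - i for every pair and clips it to a maximum distance, so that all sufficiently distant tokens share one bucket. Clipping is an assumption borrowed from one family of relative-position schemes, and the function name is hypothetical. Actual models differ in how they turn these offsets into learned biases or embeddings.

```python
def relative_positions(n, max_distance):
    """Matrix of clipped offsets: entry [i][j] is j - i, limited to +/- max_distance."""
    return [[max(-max_distance, min(max_distance, j - i)) for j in range(n)]
            for i in range(n)]

offsets = relative_positions(4, max_distance=2)
```

Because the entries depend only on the distance between tokens, the same small set of offsets applies to a sequence of any length.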

The choice of positional encoding affects model performance on tasks requiring sequential understanding. Proper position encoding is crucial for machine translation and text summarization.

Transformer Model Advantages & Limitations Explained

Transformers offer significant advantages over traditional machine learning algorithms. Their parallel processing capabilities enable faster training and better computational efficiency.

Scalability is a major strength of transformer architecture. These models handle varying sequence lengths and batch sizes effectively. Transfer learning allows pre-trained models to adapt to new tasks with minimal additional training.

Interpretability through attention visualizations helps understand model decisions. Attention weights provide insights into which input parts the model considers important.

However, transformers have limitations, including high computational requirements and memory consumption. In particular, the cost of standard self-attention grows quadratically with sequence length. Inference can also be slow for very large models without GPU acceleration.

Context window limitations restrict the amount of text transformers can process simultaneously. Long-range dependencies beyond the context window remain challenging.
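A common workaround for a fixed context window is to split a long token sequence into overlapping chunks and process each one separately. The sketch below shows only the chunking step. The function name and parameters are hypothetical, and this approach does not let the model relate tokens that fall in different chunks.

```python
def sliding_windows(tokens, window, stride):
    """Split tokens into chunks of at most `window` items, advancing by `stride`."""
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    chunks = []
    start = 0
    while True:
        chunks.append(tokens[start:start + window])
        if start + window >= len(tokens):
            break                          # this chunk reached the end of the sequence
        start += stride
    return chunks

chunks = sliding_windows(list(range(10)), window=4, stride=3)
```

Choosing a stride smaller than the window makes neighbouring chunks overlap, which preserves some context across chunk boundaries.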

Frequently Asked Questions

How do transformers differ from traditional neural networks?

Transformers use attention mechanisms instead of recurrent connections, enabling parallel processing and better handling of long-range dependencies in sequential data.

What makes self-attention so powerful in transformers?

Self-attention allows models to focus on relevant parts of the input sequence simultaneously, capturing complex relationships between tokens regardless of their distance in the sequence.

Can transformers work for tasks beyond natural language processing?

Yes, transformers have been successfully adapted for computer vision, audio processing, and multimodal applications, demonstrating their versatility across different domains.

How much computational power do transformers require?

Transformers require significant computational resources, especially for training large models. GPU acceleration and distributed computing are typically necessary for practical applications.

What are the main challenges in training transformer models?

Key challenges include high computational costs, large memory requirements, data quality demands, and the need for careful hyperparameter tuning and regularization.

Conclusion

Transformer models represent a fundamental breakthrough in machine learning. Their self-attention mechanisms and parallel processing capabilities have revolutionized natural language processing and expanded into computer vision and beyond.

Understanding transformer architecture, multi-head attention, and positional encoding is crucial for anyone working with modern systems. These models power today’s most advanced language models and deep learning applications.